Fix These 5 SEO Mistakes to Boost Site Traffic by 20% (in 2 Weeks)

SEO is an essential component of any digital marketing strategy and one of the best tools for boosting online visibility.

Unfortunately, many businesses are leaving money on the table by neglecting some of the most fundamental elements of SEO.

We’re not going to delve into link building, keyword research, or other ongoing SEO initiatives. Instead, this guide will highlight the five highest-impact SEO errors that we’ve discovered over the years. Fixing these five SEO mistakes has the potential to boost your online customer base by 20% within 2 weeks.

1. Fixing Broken Backlinks

Fixing broken backlinks is the lowest-hanging fruit for any site looking to increase its search engine traffic. For more established sites, broken backlink recovery alone will often increase traffic by 10%.

Broken backlinks are links to your site that take visitors to a broken page (or 404 error). In other words, one website tries to refer visitors to your website, but instead sends them to a page that doesn’t exist.

Broken backlinks not only lead to a negative experience for website visitors, they also discard most of the value of a direct endorsement from another website.

Finding Broken Backlinks

Start out by signing up for a trial of Ahrefs.

Type your website domain into the search function and select “Broken” under the “Backlinks” section. You should see one of two things: a list of URLs that are linking to broken pages on your site, or a note saying “There are currently no broken backlinks in our index for the specified domain/URL.”

If you have no broken backlinks, nice work! For those that do have broken backlinks, it’s time to set up some 301 redirects.

For WordPress users, we recommend downloading the Redirection or Simple 301 Redirects plugin. Add the broken URL to the plugin, and identify a closely related page on your site that you could send people to instead.

Those working on a different platform will often need to set up these redirects in an .htaccess file. Make these changes with care. A small mistake in your .htaccess file can bring down your whole site. Always make sure to save a copy of the original file in case you make a mistake.
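
As a rough illustration of what those .htaccess entries can look like, here is a minimal Python sketch that turns a list of broken URLs and their replacement pages into Apache `Redirect 301` lines. It assumes your server runs Apache with mod_alias enabled; the function name and the example URLs are hypothetical, so swap in the URLs from your own broken backlink report.

```python
from urllib.parse import urlsplit


def build_redirect_rules(redirects):
    """Return one Apache `Redirect 301` line per (broken URL, target URL) pair."""
    rules = []
    for broken_url, target_url in redirects.items():
        # mod_alias matches on the URL path, so drop the scheme and host.
        broken_path = urlsplit(broken_url).path
        if broken_path in ("", "/"):
            # `Redirect` matches by path prefix, so "/" would redirect the whole site.
            raise ValueError(f"Refusing to redirect the site root: {broken_url}")
        rules.append(f"Redirect 301 {broken_path} {target_url}")
    return rules


# Hypothetical example data: replace with the broken URLs from your report.
example_redirects = {
    "https://www.example.com/old-pricing": "https://www.example.com/pricing/",
}

for rule in build_redirect_rules(example_redirects):
    print(rule)  # Redirect 301 /old-pricing https://www.example.com/pricing/
```

Paste the generated lines into a copy of your .htaccess file first and test them before touching the live file. Note that `Redirect` matches by path prefix, so a rule for `/old-pricing` also catches `/old-pricing/anything`.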

2. Site Speed

A fast website is essential for strong search rankings, and site speed has a significant impact on website performance. Bounce rates increase by 50% if your site takes more than 2 seconds to load. In short, you’re turning customers away if your site is taking more than 1-2 seconds to load.

How to Test Site Speed

You can test your current site speed by installing Screaming Frog and running a crawl of your site. Any pages that take more than two seconds to load should be first priority.
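
If your crawl covers hundreds of pages, a short script can pull out the slow ones for you. The sketch below reads a crawl export saved as CSV and lists every page above the two-second mark, slowest first. The column names `Address` and `Response Time` (in seconds) are assumptions about the export format, so adjust them to match the headers in your own file; the sample rows are made up.

```python
import csv
import io


def slow_pages(csv_text, threshold_seconds=2.0,
               url_column="Address", time_column="Response Time"):
    """Return (url, seconds) pairs slower than the threshold, slowest first."""
    rows = csv.DictReader(io.StringIO(csv_text))
    slow = []
    for row in rows:
        try:
            seconds = float(row[time_column])
        except (KeyError, ValueError, TypeError):
            continue  # skip rows without a usable timing value
        if seconds > threshold_seconds:
            slow.append((row[url_column], seconds))
    return sorted(slow, key=lambda pair: pair[1], reverse=True)


# Made-up sample export; column names are assumed, not guaranteed.
SAMPLE_EXPORT = """Address,Response Time
https://example.com/,0.8
https://example.com/blog/,3.1
https://example.com/shop/,2.4
"""

for url, seconds in slow_pages(SAMPLE_EXPORT):
    print(f"{seconds:.1f}s  {url}")
```

To use it on a real export, read the file with `open(path, newline="").read()` and pass the text to `slow_pages`.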

Take the slowest pages on your site and run them through Google’s Page Speed Insights tool.

First priority should be to fix any items categorized under “Should Fix” on each of these pages. Some of the most common mistakes are:

- Browser caching not enabled

- Image file sizes are too large

- Render-blocking JavaScript not deferred

Improving site speed gets deeper into the technical side of SEO. Come explore our content marketing services if you find yourself overwhelmed by this.

Those with WordPress websites can download a host of plugins to help with these issues. Make sure to create a backup of your site in case any of these plugins cause issues with site performance.

For WordPress users, we recommend downloading TinyPNG for reducing image file sizes and WP Rocket for enabling browser caching.

3. Low-Quality Site Content

One of the most common SEO beliefs of the past decade is that publishing a lot of content is good for SEO. Publishing more content means more indexed pages, which means there are more ways for people to find you on search engines.

Frequently publishing content can be a great SEO strategy for sites that write time-sensitive material. However, this is not the most effective way for most sites to grow their online presence.

Neil Patel wrote a great article about this for anyone interested in learning more. For now, let’s get back to indexed pages.

Search engines rank webpages based on two main factors:

- How relevant their content is to a person’s search query

- How authoritative the website hosting that content is

Links from other reputable sites make your entire website more authoritative. Every new article has the potential to appear as the most relevant result for a person’s search query.

But the value of each link to your site is dispersed across every page of your site. In other words, each new webpage dilutes the authority of your site.

Writing content about the search queries of your customers is great, but only if that content is useful. Otherwise, this low-quality content will hurt your search rankings.

How to Find Low-Quality Content

There are a few ways to find low-quality content on your site. First, log into Google Analytics, go into “Behavior”, select “All Pages”, and set the time range for the past 6 months.

You’re now looking at every page on your site that has received website traffic within the past 6 months. Sort these pages by bounce rate, and open up any pages with a bounce rate above 80%. High bounce rates are often an indicator of poor page quality.

How many of these pages are salvageable? Separate the pages that may need a facelift from those that should have never been published.

Take the pages that might need a revamp and create a 3-6 month plan to revise these articles. From there, focus on building links to these pages to maximize their ranking potential.

We’ll get into removing these pages in a minute, but start by categorizing these pages in an Excel doc.

Return to Google Analytics and sort all pages based on the number of pageviews within the past 6 months. Open up the 10-20% of pages on your site with the lowest number of pageviews. How many of these pages are salvageable? Again, categorize these pages in an Excel doc.
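
If you would rather not sort and scan by hand, the two checks above can be sketched in a few lines of Python. This assumes you have exported your page data into a list of records with `url`, `bounce_rate` (as a fraction, so 0.80 means 80%), and `pageviews` fields. Those field names, the function name, and the sample numbers are all hypothetical.

```python
def flag_pages(pages, bounce_threshold=0.80, low_traffic_share=0.20):
    """Return URLs worth reviewing: high bounce rate or lowest pageviews."""
    high_bounce = {p["url"] for p in pages if p["bounce_rate"] > bounce_threshold}

    # Take the bottom slice of pages by pageviews (at least one page if any exist).
    by_views = sorted(pages, key=lambda p: p["pageviews"])
    cutoff = max(1, int(len(pages) * low_traffic_share)) if pages else 0
    low_traffic = {p["url"] for p in by_views[:cutoff]}

    return {"high_bounce": sorted(high_bounce), "low_traffic": sorted(low_traffic)}


# Made-up sample data for illustration only.
sample_pages = [
    {"url": "/a", "bounce_rate": 0.85, "pageviews": 900},
    {"url": "/b", "bounce_rate": 0.40, "pageviews": 1200},
    {"url": "/c", "bounce_rate": 0.92, "pageviews": 15},
    {"url": "/d", "bounce_rate": 0.55, "pageviews": 300},
    {"url": "/e", "bounce_rate": 0.60, "pageviews": 40},
]

print(flag_pages(sample_pages))
```

The script only builds the shortlist. Deciding which pages are salvageable still takes a human read of each one.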

The final step is to add taxonomy pages (e.g., categories, tags, etc.) to this list. The most common taxonomies are “/tag/”, “/category/”, “/author/”, and “/archive/”.

Note: there is a heated SEO debate about how to best handle taxonomy pages. We recommend keeping category and author pages if they currently drive any meaningful search traffic, while removing tag and archive pages.
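
For larger sites, a small helper can pick the taxonomy pages out of a URL list. The following is a minimal sketch that checks each URL's path for one of the four taxonomy segments named above. The function name and example URLs are hypothetical, and your site may use different taxonomy slugs.

```python
from urllib.parse import urlsplit

# The four taxonomy segments named in this guide; adjust for your own site.
TAXONOMY_SEGMENTS = ("tag", "category", "author", "archive")


def taxonomy_type(url):
    """Return the taxonomy name if a path segment matches one, else None."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    for segment in segments:
        if segment in TAXONOMY_SEGMENTS:
            return segment
    return None


print(taxonomy_type("https://example.com/tag/seo/"))            # tag
print(taxonomy_type("https://example.com/blog/seo-mistakes/"))  # None
```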

How to Remove Low-Quality Content

There are 2 steps to removing low-quality content and ensuring that it stays off of search engines:

- Set meta robots to noindex

- Ask Google to re-crawl these pages

Setting Meta Robots to Noindex

The meta robots tag is located on each page of your site and tells search engines like Google, Bing, and Yahoo how to treat that page.

The most common meta robots tags are as follows:

- Noindex – tells search engines not to show this page in search results.

- Nofollow – tells search engines to ignore the links on this page (i.e., it doesn’t pass authority to linked pages)

- Index – tells search engines to show this page in the SERPs (this is the default setting)

- Follow – tells search engines to pay attention to all other pages that this page links out to
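
To confirm which of these directives a page currently carries, you can inspect its HTML for a `<meta name="robots">` tag. Here is a minimal sketch using only Python's standard library. The class name, function name, and sample HTML are hypothetical, and it assumes you have already saved or downloaded the page source as a string.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html):
    """Return the set of meta robots directives found in the HTML."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


sample_html = '<head><meta name="robots" content="noindex, follow"></head>'
print("noindex" in robots_directives(sample_html))  # True
```

An empty result means the page has no meta robots tag, in which case search engines fall back to the default of index and follow.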

So how do I change meta robots?

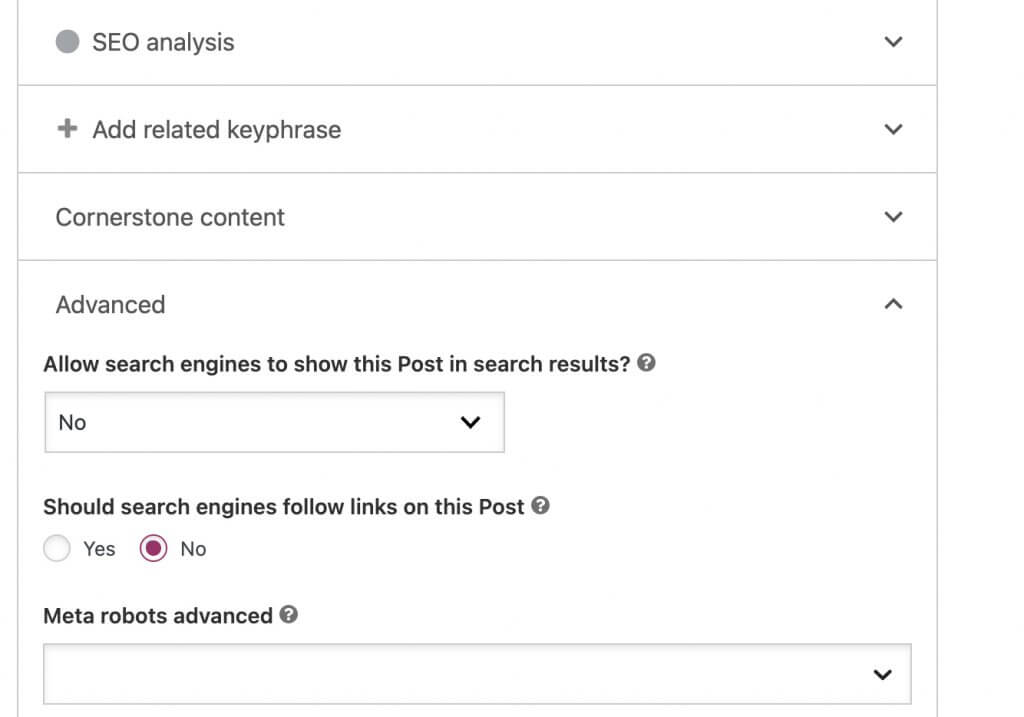

In the WordPress editor, open up each page on your site that you’re looking to deindex. Scroll down to the Yoast plugin and click “Advanced”.

For each page that you’re looking to deindex, change the settings to “no” for the question labeled “Allow search engines to show this Post in search results?”

Quick side note: if you haven’t already installed the Yoast plugin, do that now. This plugin gives your site basic SEO elements like title tags, meta descriptions, robots.txt files, and much more.

Removing Non-Existent Content

Removing content that no longer exists requires a slightly different process. Since the page no longer exists, we can’t update meta robots tags. Instead, we’re going to use 410 error messages.

A 410 (“Gone”) error tells search engines that a page has been permanently deleted. Search engines will remove these pages from search results once they come across this response.

So how do I set up a 410?

The easy way for WordPress users to do this is through Yoast Premium. This tool makes it easy to set up 410 error messages on any URL.

For non-WordPress users and everyone who wants to avoid paying for Yoast, 410 error messages can also be set up in your .htaccess file.
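
For the .htaccess route, here is a minimal Python sketch that generates one `Redirect gone` line per deleted URL. It assumes an Apache server with mod_alias enabled, where the `gone` status returns a 410. The function name and example URL are hypothetical.

```python
from urllib.parse import urlsplit


def build_gone_rules(deleted_urls):
    """Return one Apache `Redirect gone` line per deleted URL."""
    rules = []
    for url in deleted_urls:
        path = urlsplit(url).path
        if path in ("", "/"):
            # `Redirect` matches by path prefix, so "/" would 410 the whole site.
            raise ValueError(f"Refusing to mark the site root as gone: {url}")
        rules.append(f"Redirect gone {path}")
    return rules


# Hypothetical example: replace with the URLs of your deleted pages.
for rule in build_gone_rules(["https://www.example.com/2014-holiday-sale/"]):
    print(rule)  # Redirect gone /2014-holiday-sale/
```

As with 301 redirects, back up the original .htaccess file before adding these lines, and keep in mind that each rule also applies to any URL beneath that path.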

Ask Google to Re-Crawl Your Site

After telling search engines to ignore this content, we need to make sure that search engines remove these pages from search results.

If you haven’t already, set up Google Search Console (formerly Google Webmaster Tools) for your website, and go into “Remove URLs”.

From here, we’re going to enter each of these URLs and select “Temporarily hide page from search results and remove from cache”. This will temporarily remove the page from search results. When Google goes back to revisit these pages, the meta robots tag will tell Google to permanently remove these pages from search results.

Next, we’re going to remove each of the taxonomies from search results by selecting “Temporarily remove directory”.

Just like that, we’ve removed the low-quality content that is hurting your search rankings.

4. Duplicate Content

Contrary to what many believe, Google will NOT penalize your website for duplicate content. However, duplicate website content can have a negative impact on your search rankings.

As explained in this article from Moz, search engines do their best to filter out duplicate content by blocking everything other than the “original version” from search results.

Ok, so search engines block duplicate content. Who cares?

Remember that point about website authority being spread across every indexed page on your site? Duplicate content is no exception.

In other words, duplicate content dilutes the value of your most important website pages.

On top of that, many websites use the same website copy for every product or service page.

So what happens if Google sees these pages as being duplicates of one another? You may find your most valuable site pages filtered out of search results.

Duplicate Versions of Your Site

The biggest duplicate content issue is having multiple versions of your website on the internet.

You can test this by typing in http://www.yourdomain.com/, then typing http://yourdomain.com/ into a separate browser tab. Are the end URLs identical, or do you see a www. and non-www. version of your site?

If you see two separate webpages, type “site:yourdomain.com” into Google, and see which version appears in search results. Now, we’re going to redirect the other version to the one that currently appears in search results.
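
The redirect itself is set up on your server, but the mapping it needs to perform is simple: every URL on the other version should land on the same path on the preferred version. The sketch below illustrates that mapping in Python. The function names are hypothetical, and it ignores ports and assumes the only difference between the two versions is the `www.` prefix.

```python
from urllib.parse import urlsplit, urlunsplit


def strip_www(host):
    """Drop a leading "www." so both versions of a domain compare equal."""
    return host[4:] if host.startswith("www.") else host


def to_preferred_host(url, preferred_host):
    """Rewrite a URL onto the preferred host, keeping path and query intact."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if strip_www(host) != strip_www(preferred_host.lower()):
        return url  # a different domain entirely; leave it alone
    return urlunsplit(
        (parts.scheme, preferred_host, parts.path, parts.query, parts.fragment)
    )


print(to_preferred_host("http://yourdomain.com/about?x=1", "www.yourdomain.com"))
# http://www.yourdomain.com/about?x=1
```

You can use a helper like this to spot-check a handful of URLs after the server redirect is live: request the non-preferred URL and confirm it ends up at the address the function returns.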

Again, this gets into the technical side of SEO, but those who feel confident can see exactly how to set up this redirect here.

5. Inaccurate Business Listings

Business signage has the potential to make or break your brand.

Directories like Google My Business, Yelp, and dozens of others are the online version of your business’ billboards.

Unlike traditional billboards, online directories are very easy to manipulate. Everything from data aggregators to mistaken customers has the potential to destroy your business’ online billboards.

Inaccurate business information not only has the potential to hurt your search rankings. It can also send customers to the wrong address or lead them to call the wrong number when trying to ask about your services.

Years can go by before businesses realize that inaccurate phone numbers or addresses have deterred hundreds of customers from finding them. Run a free scan today to ensure that your business appears correctly on the internet’s most influential directories.

SEO is a long-term game, but fixing these common SEO mistakes will lead to immediate gains in traffic and customers.

Interested in receiving digital marketing insights before your competitors? Sign up for the Intergrowth newsletter to be the first to learn about our marketing tactics.